Elon Musk spoke in a Twitter Space on July 12, 2023. Here’s what he said about his new company, xAI, along with AI regulation, the importance of insight followed by oversight, China’s reasons to regulate AI, and “Team Humanity.” He also gave advice for young people (or anyone, for that matter) as we enter a new era, and then discussed the singularity. Elon ended the discussion on a positive note, explaining why we should be optimistic about the future.

xAI

“I think I have been banging the drum on AI safety now for a long time. If I could press pause on AI or advanced AI digital superintelligence, I would. It doesn’t seem like that is realistic.

“So xAI is essentially going to build an AI, you know, you’ve got to grow an AI in a good way, hopefully.

“The premise of an AI is to sort of have an AI that is maximally curious, maximally truth-seeking, and, this may get a little esoteric here, but I think that a curious AI, one that is trying to understand the universe, I think I want it to be pro-humanity from the standpoint that humanity is just so much more interesting than not-humanity.

“Obviously, I’m a big fan of Mars and that we should become a spacefaring civilization and a multi-planet species, but Mars is quite frankly boring relative to Earth. It’s a bunch of rocks, and there’s no life that we’ve detected, not even microbial life.

“But Earth, with the vast complexity of life that exists, is vastly more interesting than Mars.

“You just learn a lot more with humanity being there, and, I think fostering humanity, if you are trying to understand the true nature of the universe, that’s the best thing that I can come up with from an AI safety standpoint.

“I think this is better than trying to explicitly program morality into AI, because if you program in a certain morality, you have to say, well, what morality are you programming? Who’s making those decisions? And even if you are extremely good with how you program morality, there’s still a morality inversion problem. This is sometimes called the Waluigi problem, which is if you program Luigi, you inherently get Waluigi by inverting Luigi.

“Haha, to use Super Mario metaphors. I mean this is starting to get quite esoteric, but hopefully, this makes some sense.”

Who is Waluigi? For some background, Waluigi is a fictional character in the Mario franchise. He plays the role of Luigi’s arch-rival and accompanies Wario in spin-offs from the main Mario series, often for the sake of causing mischief and problems. Interestingly, Elon played the role of Wario in an SNL skit in May 2021. Wario, similar to Waluigi, was designed to be an arch-rival to Mario, an anti-hero or an antagonist.

Elon continued,

“So, I would be a little concerned about the way AI is programming the AI to say that this is good and that’s not good. xAI is really just kind of starting out here; it will be a while before it’s relevant on the scale of OpenAI/Microsoft AI or Google DeepMind AI. Those are really the two big gorillas in AI right now by far.

“I could talk about this for a long time. It’s something that I’ve thought about for a really long time and actually was somewhat reluctant to do anything in this space because I am concerned about the immense power of a digital superintelligence. It’s something that, I think, is maybe hard for us to even comprehend.

“Even if AI is extremely benign, the question of relevance, perhaps, comes up. If it can do anything better than any human, what’s the point of existing? That is also an issue. Do we even have relevance in such a scenario? That’s the bad side of it. The good side, obviously, is that in an AI future you really will have (in a benign scenario) an age of plenty where, really, there will be no shortage of goods and services. Any scarcity will be simply scarcity that we self-define as scarcity. It could be a unique piece of art or a house in a specific location. It’s artificially defined scarcity, but goods and services will not be scarce in a positive AGI future.

“But I think it’s also important for us to worry about a Terminator future in order to avoid a Terminator future.”

AI REGULATION

Elon also spoke about the critical nature of AI regulation,

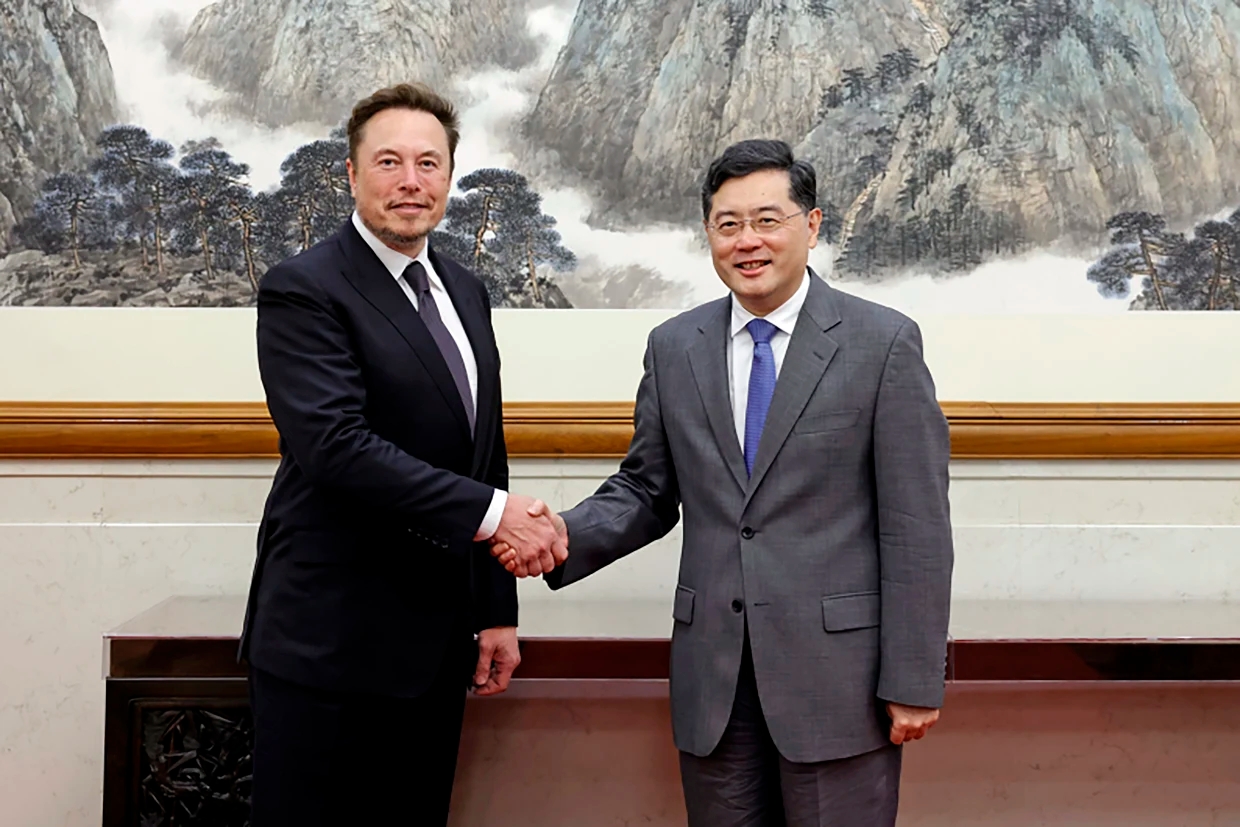

“And I am an advocate of having some sort of regulatory oversight and I’ve actually made this point throughout the world, meeting with world leaders including in China where there is actually strong agreement that there should be AI oversight, AI regulation.

“Just as we have regulations for nuclear technology, you can’t just go make a nuke in your garage, and everyone thinks that’s cool, we don’t think that’s cool. There’s a lot of regulation around things that we think are dangerous. And even if things are not dangerous at a civilizational level, we have the FDA, we have the FAA and the DOT. There are all these regulatory authorities that we put in place to ensure public safety at an individual level but AGI is just one of those things that is potentially dangerous at a civilizational level not just at an individual level. That’s why we want to have AI regulation.

“We want to be careful in how the AI regulation is implemented, not be precipitous or heavy-handed. But there has to be some kind of referee on the field here. One of the dangers is that companies race ahead to… I think it’s actually more dangerous for companies that are behind that might take shortcuts that could be dangerous.

“You know, the FAA came into being after lots of people died in aircraft crashes and they were like, if you want to make aircraft you cannot cut corners because people are going to die. So, that’s kind of how I see AI regulation. I know a lot of people are against it but I think it’s the kind of thing that we should do, we should do it carefully, we should do it thoughtfully.”

Elon paused here while Ro Khanna, a U.S. representative from California, and Mike Gallagher, a U.S. representative from Wisconsin, spoke. In response to their conversation, Elon added,

“It’s difficult to think of, I can’t think of a good movie or TV example of AI that’s the benign scenario. There are some books. The Iain Banks Culture books are the best imagining of a positive AI future that I’ve read. I think the Isaac Asimov Foundation series books have somewhat of a benign AI center (the TV series diverges quite far from the books). But the most sophisticated or perhaps the most accurate view of an AI future is the Iain Banks Culture books, which I highly recommend. It would be helpful for Hollywood to articulate that vision in a way that the public can understand.”

INSIGHT, FOLLOWED BY OVERSIGHT

Elon said,

“I think the right sequence to go with here is insight, followed by oversight. At first, it’s really just for the government to try to understand what’s going on and I think there’s some merit to an industry group, like the Motion Picture Association that I think actually should be formed so I think we’ll try to take some steps in that direction because there’s some amount of self-regulation that I think can be good here.

CHINA WILL REGULATE AI

Elon Musk is generally pro-China. He thinks China is underrated, and he agrees that the people of China are really wonderful. When he was in China, he experienced a lot of positive energy there, and he noticed that the Chinese people generally want the same things that people in America want. He admits there are many political challenges. He praises China for how much it has accomplished to further the electrification of vehicles and implement solar and wind power. He spoke about his trip to China,

“When I was on my recent trip to China, I did spend a fair amount of time with the senior leadership there, talking about AI safety and some other potential dangers and pointing out that if a digital superintelligence is created, that could very well be in charge of China instead of the Chinese Communist Party. I think that did resonate. No government wants to find itself unseated by a digital superintelligence. So I think they actually are taking action on the regulatory front and are concerned about this as a risk, and I’ve seen some comments internally within China that the companies are a bit unhappy about the government wanting to put regulatory oversight on AI. So this is something that does actually resonate even in China, because when I was in China I said one of the biggest obstacles to AI regulation outside of China is the concern that China will not regulate AI and then will get ahead, and they took that point to heart. It’s a logical point, I think. And I highlighted that, if you make superintelligence, the superintelligence could actually run China, and that also resonated.

“So I think, try to shed as much light on this subject. Sunlight is the best disinfectant. Be as open about things as possible, going from insight for a few years to oversight with consultation with the industry, is the sensible approach.

“I think the public is starting to understand the potential of AI with ChatGPT, something the public can interact with. I’ve understood the power of AI for a while, and until you have some sort of easy-to-use interface it’s difficult for the public to understand. It’s also the case with Stable Diffusion and Midjourney, you can see the incredible art that AI can create, it’s really amazing.

“I’m actually somewhat of an optimist in general, but like I said, the best way to ensure a good future is to worry about a bad one. So I think that’s the sensible thing to do, and to have discussions like this, and continue to have discussions like this.”

TEAM HUMANITY

Elon spoke about how easy it is to demonize an organization or a person if you have never met them in person, saying, “When you meet with them you’re like, well, they’re not that bad! You can understand where they’re coming from and at the end of the day, we’re all part of Team Humanity, hopefully! I think we should all aspire to be part of Team Humanity! We’ve got one planet only, so far, and we don’t want to lose it. There’s that famous quote – think it might be Einstein but could be one of those internet things where you think it’s Einstein but it’s not, where it says, it doesn’t matter how WW3 was fought except that WW4 will be fought with sticks and stones. Haha, there’s not going to be anything left! So we really want to aspire to avoid global thermonuclear warfare. We really want to avoid that, big time! Hopefully, we’ll focus on positive things like becoming a spacefaring civilization, becoming a multi-planetary species, hopefully going out there and visiting other star systems, but we may discover many long-dead one-planet civilizations that never got beyond their original planet.”

“I do not know with what weapons World War III will be fought, but World War IV will be fought with sticks and stones.” – Albert Einstein.

Elon continued,

“On the xAI front, if I speak to my personal motivations here, it’s that I’ve always just wondered what is really going on in reality. Are there aliens? Where are they? Like, the Fermi paradox I find to be intriguing and troubling. If the standard model of physics is correct, the universe has been around for many billions of years, so why haven’t we seen aliens? Many members of the public are convinced the government is hiding evidence of aliens, and I have not seen any evidence of aliens, which is a concern. I might feel better if I saw some aliens. I have not seen one shred of evidence of aliens, which is a problem. It means that life and consciousness might be incredibly rare. Maybe we are it, at least in this galaxy. The light of consciousness seems to be this tiny candle in a vast darkness and we should do our absolute best to make sure that candle does not go out.”

ELON’S THEORY ABOUT OUTCOMES RELATING TO CHINA – TAIWAN

“The most entertaining outcome is the most likely (as seen by a 3rd party, not the participants). Like, you could be watching a WW1 movie about getting blown to pieces while sipping a soda and eating popcorn. Not so great for those in the movie, but it is entertaining, which does suggest it’s probably going to get hot in the Pacific. Hopefully not too hot. But it’s going to get hot. Hopefully, we can get past that and get to a positive situation for the world in the spirit of, aspirationally, we are all on Team Humanity. But it’s going to get spicy. But the most concerning thing is probably the Taiwan question over the next 3 years and the next 3 years after that I’d be surprised if there is not digital superintelligence in roughly the 5 or 6-year timeframe. If this was a Netflix series, I’d say the season finale would be a showdown between the West and China and the series finale will be AGI.”

ELON’S ADVICE FOR YOUNG PEOPLE (AND ANYONE)

“If someone is able to contribute to building AI in a positive way, if someone has that technical ability, that is probably the right thing to work on. For your average citizen, I think the future is definitely going to be interesting. Things get very strange in a future where the AI can basically do everything. In the benign scenario, I guess we will look for personal fulfillment in some way. I think between now and then it’s just trying to be useful. On the manufacturing front, I do think we should place much greater weight on the importance of manufacturing. I think things are shifting in that direction. Generally, when somebody asks me for advice, my advice is to try to be as useful as possible. It’s actually quite hard to be useful. If you can be of use to your fellow humans and contribute more than you take then I think that’s a great thing! I have a lot of respect for those who work hard and do make goods, and provide services, in excess of what they take. That is just a fundamentally good thing.”

Elon explained that the advent of AGI is often referred to as the singularity. A singularity is like a black hole. You just don’t know what happens after that. “We are on the event horizon of the singularity of digital superintelligence.”

One of the most interesting parts of all of history is the time we live in now, and Elon Musk is optimistic. He said,

“I think if I was to assign probabilities, I think it is more likely to be a positive scenario than a bad scenario, it’s just that the bad scenario is not 0% and we want to do everything we can to minimize the probability of a bad outcome with AI. I think it is maybe 70-80% likely to be a good future. Maybe a great future even! I think of the future in probabilities, nothing is for sure. The future is a set of branching probability streams.”

What is the Turing Test? The Turing Test is a deceptively simple method of determining whether a machine can demonstrate human intelligence: If a machine can engage in a conversation with a human without being detected as a machine, it has demonstrated human intelligence.

Elon explained that he thinks we are well past the Turing Test with AI. He said,

“ChatGPT is well past the Turing Test so really we are on our way to digital superintelligence, I think it’s 5 or 6 years away. The definition of superintelligence is that it’s smarter than any human at anything. It’s not necessarily smarter than the sum of all humans, that’s a higher bar, to be smarter than the sum of all humans. Especially given that it’s the sum of all humans that are machine augmented in that we will have computers and phones and software applications. We are already de facto cyborgs, it’s just that the computer is not integrated with us. But one’s phone is already an extension of one’s self. If you leave your phone behind it feels like missing limb syndrome. You’re patting your pockets like, where did my phone go? It’s crazy the degree to which our phone is basically a supercomputer in your pocket. It is an extension of yourself. So there is a higher bar to be smarter than the sum of all humans that are computer augmented.”

Elon explained this concept has caused him stress and many sleepless nights. He tries to figure out how we navigate through these facts to the best possible future for humanity as it may be the hardest problem humans have ever faced and it deserves, or rather, it demands our attention. He adds,

“I think ultimately the nation-state battles will seem parochial compared to digital superintelligence. Of all the risks that we face, there are ones that are dangerous at an individual level and dangerous at a state level and there are things dangerous at a civilizational level. Global thermonuclear warfare is dangerous at a civilizational level, some supervirus that has high mortality rates would be dangerous. I think it’s crazy to do gain-of-function research. Gain-of-function research is like saying, death maximization! Haha, like I don’t know who came up with this gain-of-function model!? Haha. AI is also a civilizational risk, but the thing about AI is that it has the potential to be amazing if it’s done right.”

THE FUTURE IS BRIGHT

The hour-long talk ended with Elon Musk reminding listeners that,

“We want to maximize the collective happiness of humanity and the freedom of action of humanity. You want to look forward to the future and say that is the future I want to be a part of! And I’m excited about the future. That’s actually incredibly important in general. I’m actually concerned that there’s a pervasive pessimism in the world about the future and that’s part of what’s leading to a low birthrate in many parts of the world. I advocate for optimism. I think it’s generally better to be optimistic and wrong than pessimistic and right!

“You look up at the night sky, and see all those stars, and I wonder what’s going on up there. Are there alien civilizations? Is there life up there? Hopefully one day we find out!”

Elon Musk

This article is by Gail Alfar. Please credit accordingly. Since January 2022, I have been writing and recording many of Elon Musk’s talks on my blog and here in order to preserve his important words in writing. My blog link is on my Twitter bio and I thank you for reading and for your support. You rock and you are part of Team Humanity! Thank you!
